Bots are everywhere on the internet — some helpful, others harmful. While search engine bots like Googlebot crawl your website to index content, malicious bots may scrape data, overload your server, or affect SEO negatively.

Fortunately, you can control how bots interact with your site using a simple file called robots.txt. In this guide, we’ll show you how to block bots using a robots.txt file in cPanel, step by step.

For more web hosting tips and security guides, visit the Hostrago Knowledge Base.

What is robots.txt?

The robots.txt file is a plain text file located at the root of your website that provides instructions to web crawlers (bots) about which pages or directories they are allowed or disallowed from accessing.

Example:

User-agent: *

Disallow: /private-folder/

In the example above, all bots are blocked from accessing /private-folder/.
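To see how a crawler that honors robots.txt would read this rule, here is a minimal sketch using Python's standard-library urllib.robotparser. The helper name is_allowed and the sample paths are assumptions made for illustration; they are not part of cPanel or of any crawler.

```python
from urllib import robotparser

# The example rules from above, kept together as one group.
EXAMPLE_RULES = """\
User-agent: *
Disallow: /private-folder/
"""


def is_allowed(rules_text, user_agent, path):
    """Return True if user_agent may fetch path under the given robots.txt text."""
    parser = robotparser.RobotFileParser()
    parser.parse(rules_text.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    # Hypothetical paths used only to exercise the rule.
    for path in ("/private-folder/report.html", "/blog/post.html"):
        print(path, is_allowed(EXAMPLE_RULES, "Googlebot", path))
```

Python's parser treats a blank line as the end of a rule group, so in the actual file each User-agent line should sit directly above its Disallow lines.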

💡 Note: Not all bots obey robots.txt. Malicious bots may ignore these instructions, so additional server-level security may be needed for full protection.

Why Block Bots Using robots.txt?

Here are a few reasons you might want to restrict bots:

- Reduce server load caused by excessive crawling.

- Keep compliant crawlers out of directories you do not want crawled.

- Prevent content scraping.

- Improve crawl budget for SEO by prioritizing important pages.

- Block unwanted bots that do not add value to your site.

How to Block Bots Using robots.txt File in cPanel (Step-by-Step)

Step 1: Log In to Your cPanel Account

- Visit your cPanel login URL.

- Enter your username and password.

- Click Log In to access your dashboard.

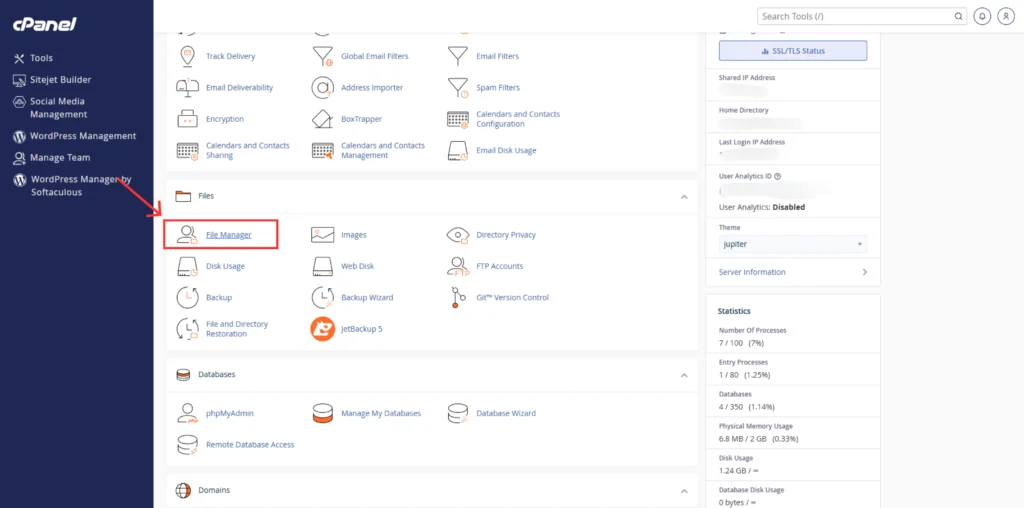

Step 2: Access the File Manager

- Under the Files section, click File Manager.

- Navigate to the root directory of your domain, typically public_html.

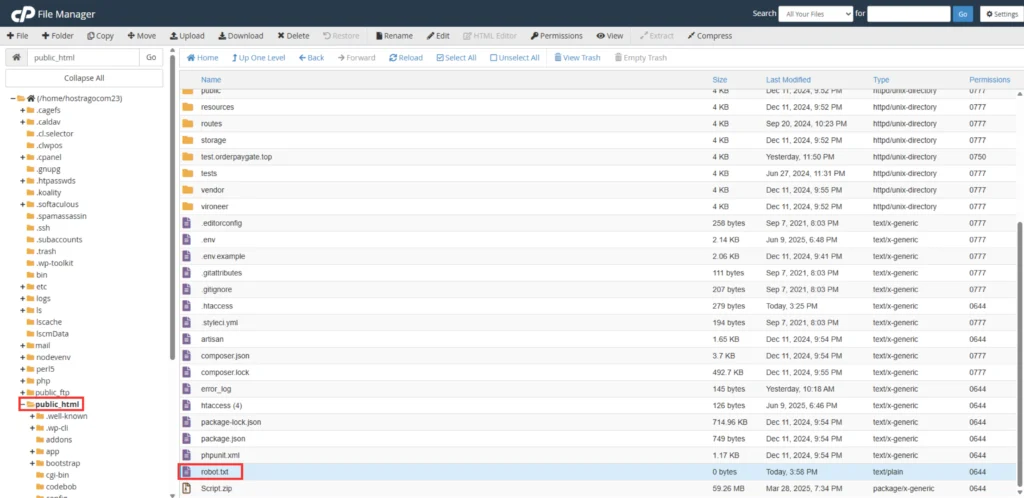

Step 3: Check for Existing robots.txt File

- Look for the robots.txt file in the root directory.

- If it exists, you can edit it.

- If it does not exist, you will create a new file.

Step 4: Create a New robots.txt File (If Needed)

- Click on + File at the top-left corner.

- Name the file robots.txt.

- Click Create New File.

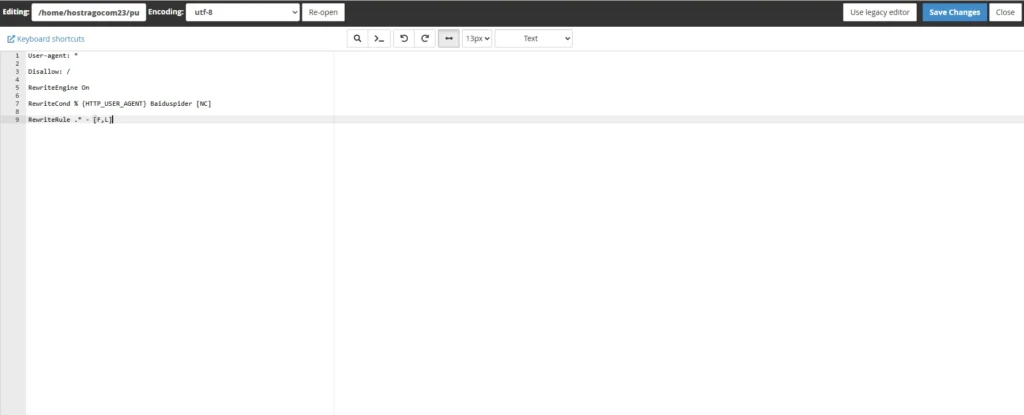

Step 5: Edit the robots.txt File

- Right-click on robots.txt and select Edit.

- Enter your bot blocking rules.

For example, to block all bots:

User-agent: *

Disallow: /

- To block a specific bot, such as AhrefsBot:

User-agent: AhrefsBot

Disallow: /

- To allow all bots but block specific directories:

User-agent: *

Disallow: /private-folder/

Disallow: /temp/

Step 6: Save Changes

- After adding your rules, click Save Changes.

Your new bot blocking rules are now active.
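Before relying on the saved file, it can help to check locally how the rules from Step 5 behave when combined. The sketch below assumes a hypothetical file that blocks AhrefsBot entirely and keeps every other bot out of /private-folder/ and /temp/. It uses Python's standard-library urllib.robotparser, and the names COMBINED_RULES, build_parser, and access_table are illustrative only.

```python
from urllib import robotparser

# Hypothetical combined file: one group for AhrefsBot, one for everyone else.
# A blank line separates the two groups.
COMBINED_RULES = """\
User-agent: AhrefsBot
Disallow: /

User-agent: *
Disallow: /private-folder/
Disallow: /temp/
"""


def build_parser(rules_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = robotparser.RobotFileParser()
    parser.parse(rules_text.splitlines())
    return parser


def access_table(rules_text, agents, paths):
    """Map each (agent, path) pair to True (allowed) or False (blocked)."""
    parser = build_parser(rules_text)
    return {(a, p): parser.can_fetch(a, p) for a in agents for p in paths}


if __name__ == "__main__":
    table = access_table(
        COMBINED_RULES,
        ["AhrefsBot", "Googlebot"],
        ["/", "/temp/cache.html", "/about.html"],
    )
    for (agent, path), allowed in table.items():
        print(f"{agent:10} {path:18} {'allowed' if allowed else 'blocked'}")
```

This only models crawlers that follow the rules; it says nothing about bots that ignore robots.txt.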

Additional Tips for Managing Bots

- ✅ Use robots.txt carefully; blocking important pages may affect SEO.

- ✅ Combine robots.txt with other security measures like IP blocking or firewall rules.

- ✅ Use the robots.txt report in Google Search Console to verify your file.

- ✅ For sensitive data, use authentication and server-level restrictions, not just robots.txt.

How to Verify Your robots.txt Is Working

- Visit https://yourdomain.com/robots.txt in your browser.

- Review the file to ensure your rules are correctly applied.

- Use webmaster tools (like Google Search Console) to test your robots.txt.
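The manual check above can also be scripted. This is a rough sketch, assuming Python 3 and its standard-library urllib.robotparser; load_live_rules and blocked_paths are hypothetical helper names, and yourdomain.com is the same placeholder used above.

```python
from urllib import robotparser


def load_live_rules(robots_url):
    """Download and parse a live robots.txt file (performs a network request)."""
    parser = robotparser.RobotFileParser()
    parser.set_url(robots_url)
    parser.read()
    return parser


def blocked_paths(parser, user_agent, paths):
    """Return the paths that user_agent is not allowed to fetch."""
    return [p for p in paths if not parser.can_fetch(user_agent, p)]


# Example usage (replace the placeholder domain with your own):
# live = load_live_rules("https://yourdomain.com/robots.txt")
# print(blocked_paths(live, "Googlebot", ["/", "/private-folder/", "/temp/"]))
```

If the list contains a path you expected to be crawlable, the rule that matches it is the first place to look.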

Need Help? Contact Hostrago Support

If you need assistance managing your robots.txt file or optimizing your hosting security, our support team is available 24/7.

👉 Contact Hostrago Support

Or explore our Hostrago Knowledge Base for more helpful guides.

Frequently Asked Questions (FAQ)

1. What is robots.txt?

The robots.txt file is a text file placed at the root of your website that gives instructions to web crawlers (bots) about which parts of your site they are allowed or disallowed from accessing.

2. Why should I block bots using robots.txt?

Blocking bots using robots.txt can help:

- Reduce server load from unnecessary crawlers.

- Prevent content scraping.

- Improve SEO by focusing search engine crawlers on important pages.

- Block specific bots that may harm your website performance.

3. Can all bots be blocked using robots.txt?

No. While most legitimate bots (like Googlebot, Bingbot) follow robots.txt rules, some malicious bots may ignore these instructions. For full protection, you may need additional server-side security like firewalls, IP blocking, or bot management solutions.

4. Where should I place the robots.txt file?

The robots.txt file should be located in your website’s root directory, typically /public_html/robots.txt.

This allows bots to find and read the file when crawling your domain.

5. How do I block all bots from my website?

To block all bots from accessing your website, add the following lines to your robots.txt file:

User-agent: *

Disallow: /

This tells all bots not to crawl any part of your website.

6. Can I block specific bots only?

Yes. You can target specific bots using their user-agent names.

For example, to block AhrefsBot:

User-agent: AhrefsBot

Disallow: /

This allows you to block individual bots while still allowing others.

7. Does blocking bots with robots.txt affect SEO?

Blocking good bots like Googlebot can negatively impact SEO because your content won’t be indexed. It’s recommended to only block bots that are unnecessary or harmful to your website.
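One way to guard against this is to check a draft file for rules that lock out search engine crawlers before you save it. The sketch below is an illustration only: it assumes Python's standard-library urllib.robotparser, and the SEARCH_BOTS tuple and the blocked_search_bots helper are hypothetical names. The crawler list is limited to the two named in this guide.

```python
from urllib import robotparser

# Crawler names taken from this guide; extend the tuple as needed.
SEARCH_BOTS = ("Googlebot", "Bingbot")


def blocked_search_bots(rules_text, path="/"):
    """Return the search crawlers that the rules keep away from path."""
    parser = robotparser.RobotFileParser()
    parser.parse(rules_text.splitlines())
    return [bot for bot in SEARCH_BOTS if not parser.can_fetch(bot, path)]


if __name__ == "__main__":
    draft = "User-agent: *\nDisallow: /\n"
    print(blocked_search_bots(draft))
```

An empty result means neither crawler is blocked from the given path. It does not mean the file is otherwise correct.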

8. How can I test if my robots.txt is working?

You can check your robots.txt file using the robots.txt report in Google Search Console to ensure it’s properly configured.

9. Can I edit or update robots.txt anytime?

Yes. You can edit the robots.txt file in cPanel anytime. Changes take effect as soon as the file is saved and crawlers visit your site again.

10. Where can I find more cPanel guides?

You can visit our full Hostrago Knowledge Base for more detailed cPanel tutorials, security guides, and web hosting tips.

Conclusion

Blocking bots with a robots.txt file in cPanel is a simple yet powerful way to control how bots interact with your website. Whether you’re blocking harmful bots or fine-tuning your SEO, proper configuration of this file can save server resources and protect your content.

👉 For more tutorials, visit our Hostrago Knowledge Base.

👉 Ready for secure and reliable hosting? Check out our Web Hosting Plans.